Robbyant, an embodied AI company within Ant Group, today open-sourced LingBot-Depth, a high-precision spatial perception model designed to enhance robots’ depth sensing and 3D environmental understanding capabilities in complex real-world environments.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20260126215468/en/

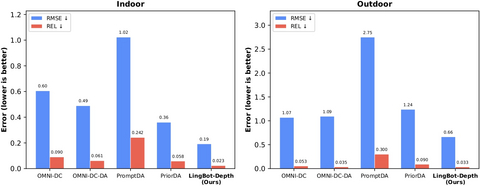

LingBot-Depth outperforms major models on the most challenging sparse depth completion task

In parallel, Robbyant announced a plan to form a strategic partnership with Orbbec, a leading provider of robotics and AI vision. As part of this collaboration, Orbbec will integrate LingBot-Depth into its next-generation depth cameras tailored for embodied intelligence applications.

In benchmark evaluations on NYUv2 and ETH3D, LingBot-Depth outperforms major models such as PromptDA and PriorDA, achieving a relative error (REL) reduction of over 70% in indoor scenes and reducing RMSE by approximately 47% on the challenging sparse Structure-from-Motion (SfM) task.
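For readers unfamiliar with the two metrics, the sketch below shows how REL and RMSE are commonly computed for depth maps, and how a relative reduction such as "over 70%" is derived from a baseline score. It assumes the standard definitions (mean absolute relative error and root-mean-square error over pixels with valid ground truth); the exact evaluation protocol used for LingBot-Depth is described in the tech report, and the function names here are illustrative only.

```python
import numpy as np

def depth_errors(pred, gt):
    """Return (REL, RMSE) over pixels with valid ground truth.

    Assumption: a ground-truth value of 0 marks a pixel with no measurement.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    valid = gt > 0
    diff = pred[valid] - gt[valid]
    rel = float(np.mean(np.abs(diff) / gt[valid]))   # mean |pred - gt| / gt
    rmse = float(np.sqrt(np.mean(diff ** 2)))        # root-mean-square error
    return rel, rmse

def relative_reduction(baseline, improved):
    """Fraction by which an error metric drops relative to a baseline."""
    return (baseline - improved) / baseline

# Toy values in meters, not benchmark data.
gt = np.array([[2.0, 4.0], [0.0, 1.0]])      # 0.0 = no ground truth
pred = np.array([[2.2, 3.6], [5.0, 1.0]])
rel, rmse = depth_errors(pred, gt)
```

Under these definitions, a baseline REL of 0.10 falling to 0.03 would be a relative reduction of 0.7, or 70%.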

Depth sensing systems often struggle to capture reliable depth data from transparent or highly reflective surfaces, such as glass, mirrors, and polished metal, due to inherent limitations of optical physics. These surfaces frequently result in missing depth information or severe noise, causing robots to miss objects or miscalculate distances—potentially leading to operational failures or safety risks.

Robbyant developed Masked Depth Modeling (MDM) to address this industry-wide challenge. When depth inputs from a sensor are incomplete or corrupted, LingBot-Depth can analyze RGB image features, including texture, object contours, and scene context, to infer and reconstruct missing regions, producing denser, more accurate, and sharper 3D depth maps.
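As a rough illustration of the general masked-modeling idea, the sketch below hides random square patches of a depth map so that a model could be trained to reconstruct them from the RGB image and the remaining depth. This is not Robbyant's implementation: the patch size, the mask ratio, the use of 0 to mark missing depth, and the function name are all assumptions made for the example.

```python
import numpy as np

def mask_depth_patches(depth, patch=4, ratio=0.5, seed=0):
    """Zero out a random subset of patch-sized blocks in a depth map.

    Returns (masked_depth, mask), where mask is True at hidden pixels.
    Hypothetical helper for illustration; 0.0 stands for "no depth".
    """
    depth = np.asarray(depth, dtype=np.float32)
    h, w = depth.shape
    gh, gw = h // patch, w // patch              # patch grid size
    rng = np.random.default_rng(seed)

    # Choose which patches to hide.
    n_masked = int(round(ratio * gh * gw))
    flat = np.zeros(gh * gw, dtype=bool)
    flat[rng.choice(gh * gw, size=n_masked, replace=False)] = True
    grid = flat.reshape(gh, gw)

    # Expand the patch grid to pixel resolution; edge pixels that do not
    # fill a whole patch are left unmasked.
    mask = np.zeros((h, w), dtype=bool)
    mask[: gh * patch, : gw * patch] = np.repeat(
        np.repeat(grid, patch, axis=0), patch, axis=1
    )

    masked = depth.copy()
    masked[mask] = 0.0
    return masked, mask

# Toy example: an 8x8 depth map with half of its four 4x4 patches hidden.
depth = np.ones((8, 8), dtype=np.float32)
masked, mask = mask_depth_patches(depth, patch=4, ratio=0.5)
```

A training loop would then compare the model's prediction against the original depth at the masked pixels, which mimics the holes that real sensors leave on glass, mirrors, and polished metal.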

LingBot-Depth demonstrates strong compatibility with existing hardware, and its performance gains are achieved without requiring any changes to current sensor form factors. This underscores the value of integrating high-quality, chip-level depth data with perception algorithms specifically designed for real-world challenges.

The development of LingBot-Depth was closely supported by Orbbec, which contributed key hardware resources and technical expertise. The model was co-optimized on Orbbec’s platforms, with its performance validated by Orbbec’s Depth Vision Laboratory.

Leveraging chip-level raw depth data from Orbbec’s Gemini 330 stereo 3D cameras, LingBot-Depth intelligently reconstructs missing information, significantly improving robots’ perception robustness and task success rates in complex optical environments. Gemini 330 was also used for data collection, training, and validation, with LingBot-Depth fine-tuned on high-quality RGB-depth pairs directly output by Gemini 330 cameras.

At the heart of the Gemini 330 is Orbbec’s proprietary MX6800 depth engine chip, which combines active and passive imaging to deliver reliable 3D data across extreme lighting conditions, from darkness to direct sunlight. On-device depth computation and precise sensor synchronization further reduce system latency and host compute requirements.

To train LingBot-Depth, Robbyant collected approximately 10 million raw samples and curated a high-quality dataset of 2 million RGB-depth pairs, specifically optimized for extreme and ambiguous conditions. Robbyant also plans to open-source this dataset in the near future to accelerate community-driven innovations in spatial perception for complex environments.

Zhu Xing, Chief Executive Officer of Robbyant, noted, “Reliable 3D vision is critical to the advancement of embodied AI. By open-sourcing LingBot-Depth and collaborating with hardware pioneers like Orbbec, we aim to lower the barrier to advanced spatial perception and accelerate the adoption of embodied intelligence across homes, factories, warehouses, and beyond.”

"Robbyant's work in spatial intelligence models and algorithms complements Orbbec's expertise in 3D vision chips and robotic vision systems," said Len Zhong, Head of Product Management of Orbbec. "In this collaboration, the chip-level depth data provided by the Gemini 330 delivers a stable, high-fidelity, and physically grounded data foundation for the LingBot-Depth model. It's a great example that demonstrates close coupling between a robot's sensing hardware and its perception intelligence."

Looking ahead, Robbyant plans to share its spatial perception capabilities with a broader ecosystem of hardware partners to support the real-world deployment of intelligent robots in complex, dynamic settings.

To learn more about LingBot-Depth, please visit:

- Code: https://github.com/Robbyant/lingbot-depth

- Tech Report: https://github.com/Robbyant/lingbot-depth/blob/main/tech-report.pdf

- HuggingFace: https://huggingface.co/robbyant/lingbot-depth

About Robbyant

Robbyant is an embodied intelligence company within Ant Group, dedicated to advancing embodied intelligence through cutting-edge software and hardware technologies. Robbyant independently develops foundational large models for embodied AI and actively explores next-generation intelligent devices, aiming to create robotic companions and caregivers that truly understand and enhance people’s everyday lives and deliver reliable intelligent services across key use cases, such as elderly care, medical assistance, and household tasks.

To learn more about Robbyant, please visit: www.robbyant.com

About Orbbec

Founded in 2013, Orbbec is a leading provider of robotics and AI vision. The company delivers full-stack 3D vision solutions spanning structured light, stereo vision, ToF, and LiDAR technologies, serving over 3,000 customers across nearly 100 countries and regions. It continues to sharpen its focus on robotic "eye" core technology in the AI era, advancing "hand-eye-brain" integration and multi-sensor fusion perception to make robots more intelligent and versatile.

Contact details:

Media Inquiries

Vick Li Wei

Ant Group

[email protected]